Why DevOps is a hot buzzword

What do security, scalability, reliability, longevity, and quality have in common when it comes to web-based applications or websites?

All of these things are directly affected by something few business people think about - DevOps. You may have seen it mentioned since it is getting more attention recently, in part due to the large number of web applications and functional web sites and the business-critical nature of these applications.

DevOps stands for "Developer Operations" and it encompasses all the things your developers do (or should do) that don't relate to writing code and building features.

Some simple examples of DevOps would be:

- Keeping the servers up and running

- Monitoring downtime of servers

- Ensuring that deploying new features is painless, but can also be undone quickly if necessary

- Automatically monitoring for end-user errors

Why do managers need to understand DevOps? Put simply, DevOps covers all the processes that keep your application running and ensure that you can continue to enhance and grow functionality. While DevOps does include having a process for disaster recover, the real focus is on avoiding problems before they occur. It's also important to understand that DevOps isn't just a concern for major sites like Twitter and Instagram. Every business owner or manager that has responsibility for a web application or web site that is important to business success should understand the basics of DevOps.

What do managers need to know? Basically DevOps breaks down into several categories, and managers should understand what these categories mean, and how they are being handled for your applications. The basic categories are:

- How the servers are set up

- How code is handled

- How testing is handled

- How applications are monitored

- How servers are maintained

- How your application will handle scaling up

Server set up

The first thing to be aware of is where your servers live. In the case of most of our applications the servers are in Amazon's cloud: AWS. We chose AWS because it is the most avanced, reliable and mature "Infrastructure as a Service" (IaaS) provider. Other places your servers could live would be on another cloud provider (Microsoft Azure, Heroku), in a local hosting facility, or on your own servers. This matters because some of these options provide a rich environment that will do much of the work for you, where hosting it on your own or in a local hosting facility may mean that you (your developers) have to do more work to keep things running smoothly.

How code is handled.

Code is a pretty broad term, and covers everything from application code written in a programming language to assets such as CSS, and for the purpose of this discussion would also include static images and other assets that are bundled into a web application. All these assets need to live somewhere, and the normal place they live is in a source code control system. We typically use Git, and host files on their cloud service GitHub.com. There are several reasons why it's important to think about where the code lives. Besides the obvious things like controlling who has access to view and modify code, with modern deployment technology then the act of packaging, testing and deploying the code can be automated. This saves time and increases reliability of your applications.

When we speak of how the code is handled, we are talking about how it gets from a developer's local machine to your servers. At Shiny Creek this process is thoroughly automated. We leverage the much-vaunted CircleCI - a continuous integration tool - to make sure that the code first passes all the regression tests. Each time a developer checks in their code, CircleCI checks the code out of GitHub and runs all the test scripts, alerting developers if anything doesn't pass. We further use CircleCI to actually deploy the code to the servers. All features first move to the Development server, and then after customer acceptance it is moved to Staging and finally (less often) is deployed to the Production environment. This process involves have a good branching process (managing all the different paths the code moves through as a team is working on it) and having the ability to undo those changes in a systematic way if something is discovered that is causing an issue.

How testing is handled.

Everyone is familiar with basic application testing. When a new feature is added, it typically is deployed first to a testing or staging server, and a human being makes sure it is doing what was expected. Automated code testing is the industry-standard way to ensure that your application will continue to work as you enhance it and add more features. The automated tests are built in code. Some tests are used for checking things like application logic and calculations. In our case those are rspec tests - the unit tests used in Ruby on Rails. Other automated tests can actually mimic user behavior by simulating your front end. We use Capybara and similar tools to handle these types of tests. Regardless of how your team is handling testing, it is good idea to get them to explain the testing philosophy they are using and to get a comfort level with their ability to add new features without breaking existing functionality.

How applications are monitored

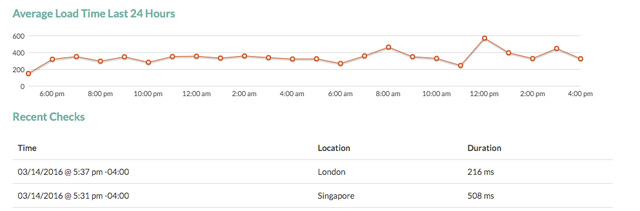

Modern web applications (and the servers that run them) can be monitored at several different levels to make sure they are operating correctly. The key things to monitor are uptime, performance, server health and error messages. There are many services that can do this automatically. At Shiny Creek we use tools like Honeybadger.io to monitor both uptime and error messages. With Honeybadger, uptime is monitored from multiple geographic locations and also provides round-trip times. Error messages mean anything that generated an error on the server, such as a user clicking on a feature that resulted in something unexpected.

Your error monitoring service should ideally do several things - inform the developers that an error occurred, tell them what the user was doing, and where in the code failure occurred. Ideally it will provide as much context for the error as possible. Server health refers to how close the server is to capacity, including memory usage, CPU usage, and storage. We use AWS Cloudwatch for this but there are many services that can provide this type of monitoring. Performance monitoring includes both understanding how quickly your application can handle user requests, and also what you can do to make this better. Performance monitoring is much more closely tied to the specifics of how an application is written, but a good architect working with modern tools can use them to pinpoint bottlenecks and determine how to help keep the application performing well.

How servers are maintained

This category covers things like basic upkeep of servers - cleaning out logs, applying patches, updating various software components. This work is somewhat less automated than the other categories, and requires having engineers that have a good understanding of the operating system. In the case of our servers the OS is Linux, usually a standard distribution such as Ubuntu. Many areas of upkeep can be automated, such as automatically applying security patches. The key thing for server maintenance is to have well-defined processes to ensure that this maintenance doesn't affect production servers in unplanned ways.

How your application will handle scaling up

This final category needs an article of it's own to adequately cover. The first thing that impacts scalability is the application stack, which describes the components that make up a web application. For example, our typical application might use Nginx as the web server, Passenger or Puma as the application server, Postgres database, and Sidekiq for asynchronous processes. A good architect should be able to explain to you what tools they are using for each area of the application stack and why. Scaling the application after it has been launched is generally a process of increasing the power of the physical or virtual servers themselves (vertical scaling) and adding new servers and distributing the load between them (horizontal scaling). There may be other reasons to use additional (horizontal) servers, such as providing geographically diverse servers to speed up performance across the country or world, and providing for backups and failover in the case of servers or connections going down. The key thing as a manager is to get the developers to explain in plain english:

- How will we scale up as traffic or load increases on the application?

- How do we handle servers going down? Will traffic automatically flow to other servers?

- How much will the current architecture scale? And do we have plans for what to do if that limit is lower than where we see traffic in the next few years?

This article provides just the basics for understanding DevOps. Realistically managers don't need to understand the technical side of how these different categories are handled, just that they are being looked at. One key point to emphasize is that each of these categories requires time (resources) to set up correctly. Finding the right balance for your specific application needs and making sure your people have time to implement the right processes and use the correct tools will save headaches in the long run and leave you with one less thing to worry about.